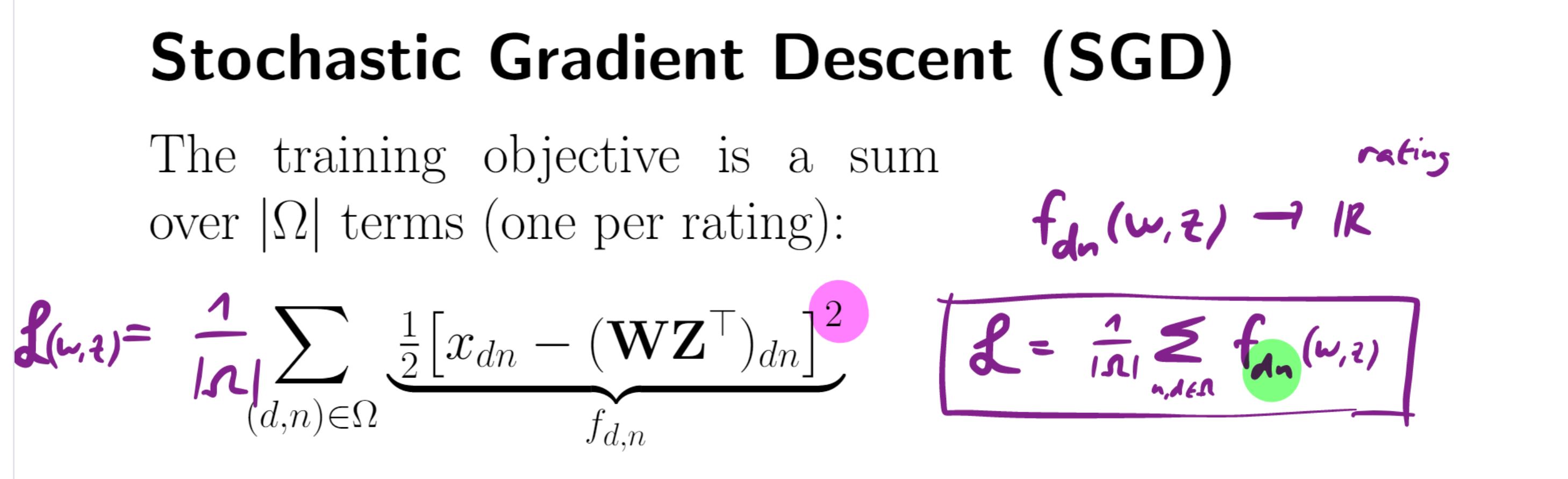

I remember in the labs sometimes emphasis was placed on how to implement SGD based on the way the loss function is defined. In this lecture note in the annotated version we add the 1/omega in front. This is pretty convenient cause then we can easily use the individual sum terms for the SGD gradient. But in the exam how do we know if we can arbitrarily add a division term in front of the loss function?

It does not change to add a const element in front of it. The minimisation problem is the same, I mean the arguments you will obtain will be the same, the min value will be different but will juste be scaled by a paramater 1/|omega| for example. This does not change the implementation of SGD that much. You won't have your scaled element anymore in front of your gradient, but as you know you should update

w^{t+1} = w^{t} - \gamma \nabla f(w^{t}). In order to not diverge too much just take a smaller gamma than when you have your 1/|omega| in front.

I know the minimum should be the same but I ask because for example in the exam we need to take into account whether or not objective is divided by 1/N when computing the SGD. So here if we look simply at the notes without annotations the SGD should be *omega no?

loss function and sgd

Hello,

I remember in the labs sometimes emphasis was placed on how to implement SGD based on the way the loss function is defined. In this lecture note in the annotated version we add the 1/omega in front. This is pretty convenient cause then we can easily use the individual sum terms for the SGD gradient. But in the exam how do we know if we can arbitrarily add a division term in front of the loss function?

Thanks for the help

It does not change to add a const element in front of it. The minimisation problem is the same, I mean the arguments you will obtain will be the same, the min value will be different but will juste be scaled by a paramater 1/|omega| for example. This does not change the implementation of SGD that much. You won't have your scaled element anymore in front of your gradient, but as you know you should update

w^{t+1} = w^{t} - \gamma \nabla f(w^{t}). In order to not diverge too much just take a smaller gamma than when you have your 1/|omega| in front.

Thank you for your answer,

I know the minimum should be the same but I ask because for example in the exam we need to take into account whether or not objective is divided by 1/N when computing the SGD. So here if we look simply at the notes without annotations the SGD should be *omega no?

see http://oknoname.herokuapp.com/forum/topic/635/stochastic-gradient-problem-19/

i'm afraid about the technicality of implementations and how we should consider a formulation as 'correct' on the exam

Add comment