Randomised Smoothing: Upper bound on allowed perturbation

I was wondering what is the relationship between the maximum scale of the adversarial perturbation under which we can still

expect to get a robust prediction ($$ \epsilon $$) and the noise variance considered in a smoothed classifier ($$ \sigma $$) in higher dimensions.

The lecture script derives the expression $$ Q^{-1}(\frac{1-p}{\sigma})$$ as the maximum distance a point can be moved away from the original feature to still get a correct predition in a simple 1-dimensional example .

How does this translate to a classification problem in multiple dimensions? Is there another expression to describe the relationship between $$\epsilon$$ and $$\sigma$$ other than a "not too large $$ \epsilon $$?

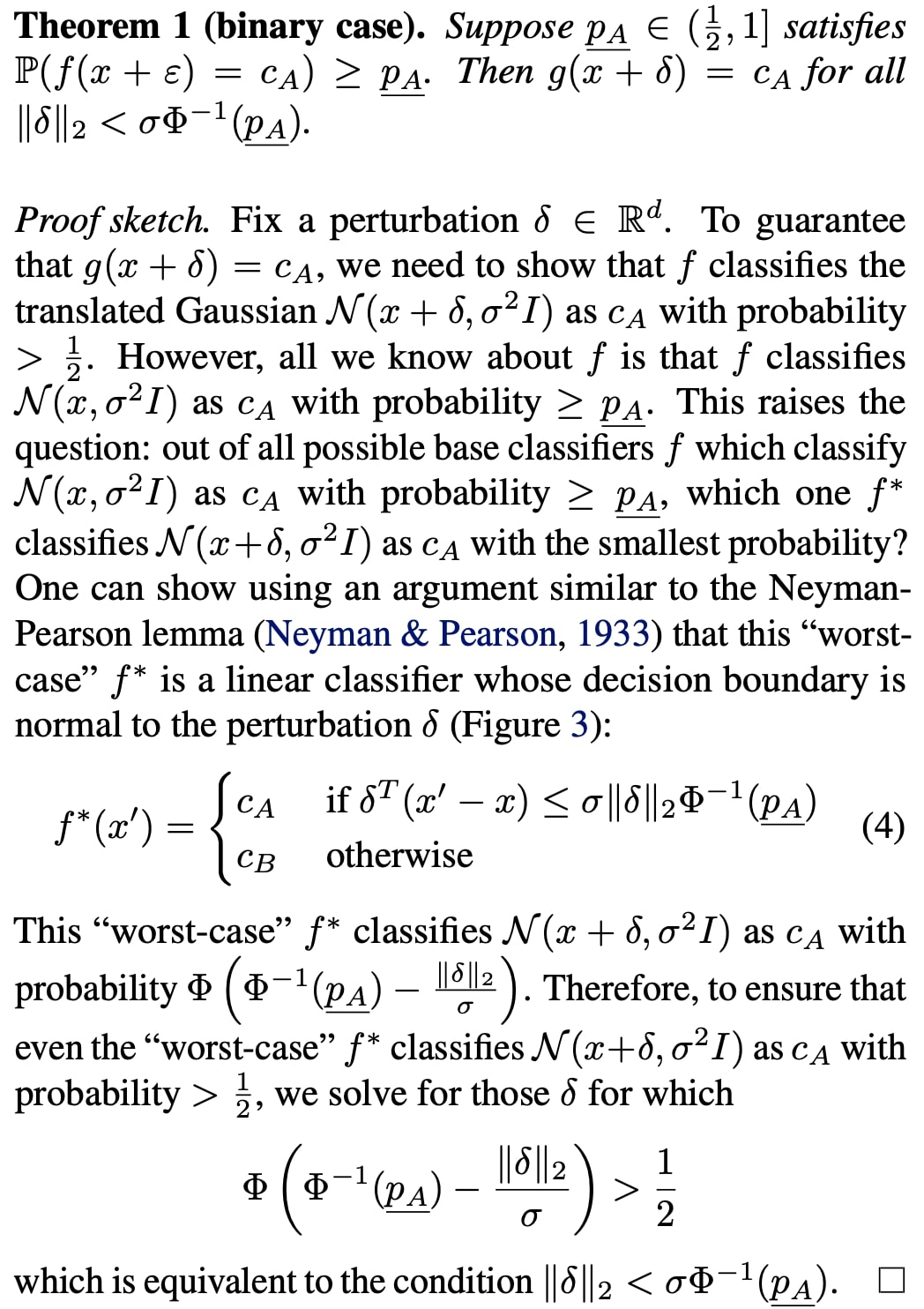

I think the best reference on this topic is Certified Adversarial Robustness via Randomized Smoothing. In particular, what you are interested in is shown in Theorem 1 (binary case -- for simplicity). For an arbitrary input dimension \(d\) it establishes the following robustness guarantee: if the smoothed prediction on point \(x\) is at least \(\underline{p_A}\), then we can perturb \(x\) by any vector \(\delta\) with norm \(||\delta||_2 < \sigma \Phi^{-1}(\underline{p_A})\) without changing the original prediction.

Here is the full statement of the theorem and its short proof:

Randomised Smoothing: Upper bound on allowed perturbation

I was wondering what is the relationship between the maximum scale of the adversarial perturbation under which we can still

expect to get a robust prediction ($$ \epsilon $$) and the noise variance considered in a smoothed classifier ($$ \sigma $$) in higher dimensions.

The lecture script derives the expression $$ Q^{-1}(\frac{1-p}{\sigma})$$ as the maximum distance a point can be moved away from the original feature to still get a correct predition in a simple 1-dimensional example .

How does this translate to a classification problem in multiple dimensions? Is there another expression to describe the relationship between $$\epsilon$$ and $$\sigma$$ other than a "not too large $$ \epsilon $$?

Excellent question!

I think the best reference on this topic is Certified Adversarial Robustness via Randomized Smoothing. In particular, what you are interested in is shown in Theorem 1 (binary case -- for simplicity). For an arbitrary input dimension \(d\) it establishes the following robustness guarantee: if the smoothed prediction on point \(x\) is at least \(\underline{p_A}\), then we can perturb \(x\) by any vector \(\delta\) with norm \(||\delta||_2 < \sigma \Phi^{-1}(\underline{p_A})\) without changing the original prediction.

Here is the full statement of the theorem and its short proof:

Does this answer your question?

3

Yes. That answers my question on the point :)

Add comment