Hello,

In the question below for me we have that ALS has a cost O(NKD) and SGD has a cost O(K) per iteration.

But I don't see why b) is correct because ALS does not have any iteration, you compute all in once.

SGD for least squares is linear in D

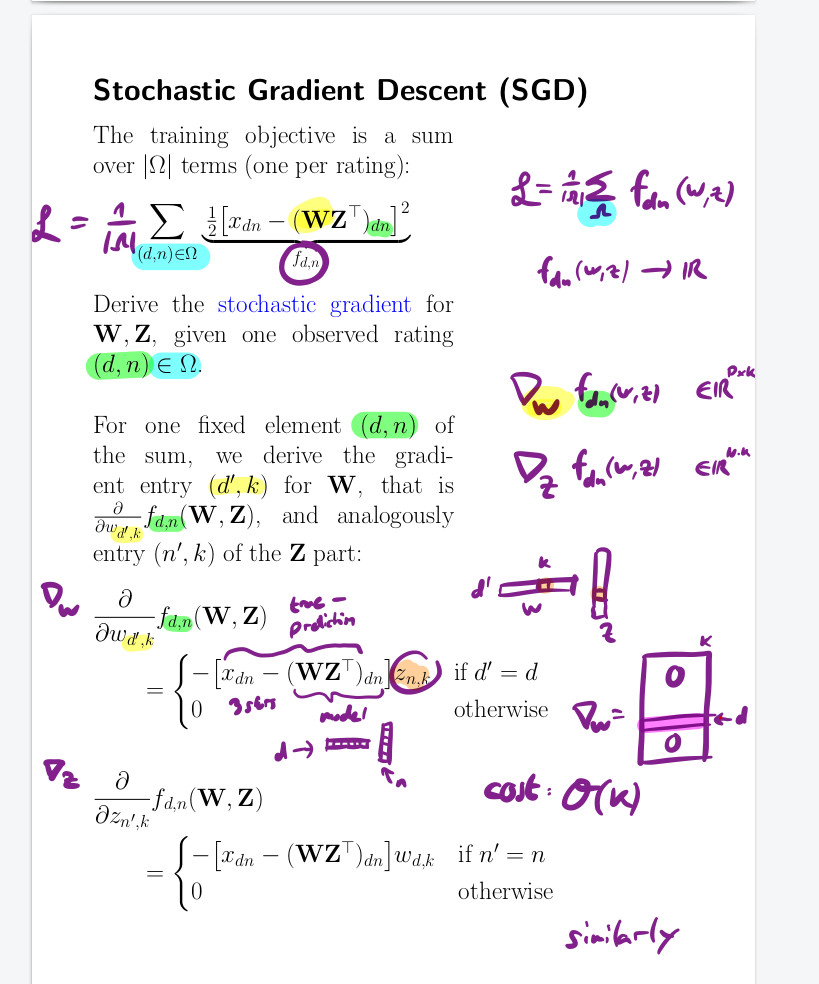

But this question ask SGD for matrix factorisation, in this case we derive a gradient with n and d fixed.

The only iteration is on features K so a SGD step for matrix factorisation is 0(K)

note that ALS is also an iterative algorithm. It starts from some initial point W, Z (in the notation of the lecture 13) and performs alternating minimisation steps on W and Z many times. See also ex13 in the course, last task about implementing ALS.

SGD for least squares is linear in D

But this question ask SGD for matrix factorisation, in this case we derive a gradient with n and d fixed.

The only iteration is on features K so a SGD step for matrix factorisation is 0(K)

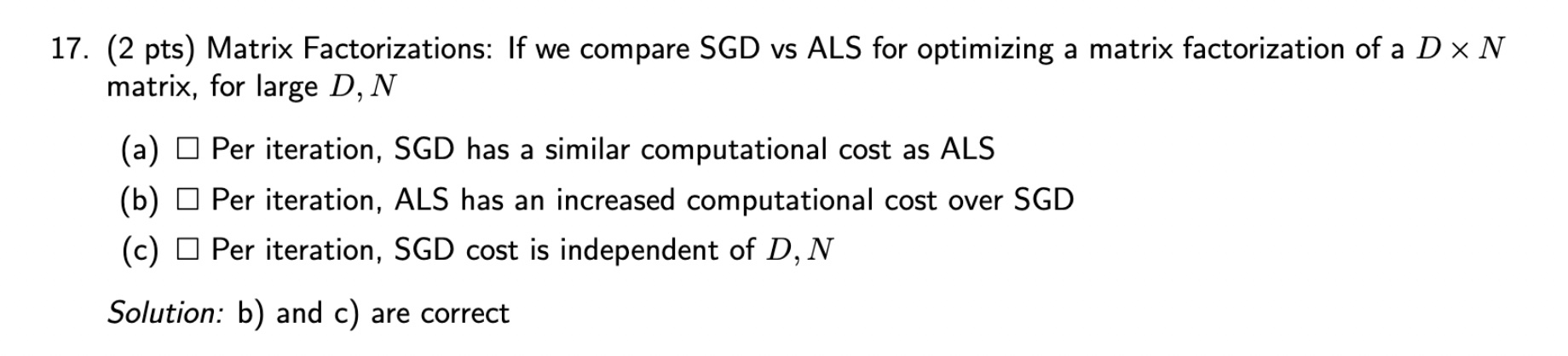

Q17 exam 2016

Hello,

In the question below for me we have that ALS has a cost O(NKD) and SGD has a cost O(K) per iteration.

But I don't see why b) is correct because ALS does not have any iteration, you compute all in once.

1

SGD for least squares is linear in D

But this question ask SGD for matrix factorisation, in this case we derive a gradient with n and d fixed.

The only iteration is on features K so a SGD step for matrix factorisation is 0(K)

see this :

4

Hi,

note that ALS is also an iterative algorithm. It starts from some initial point W, Z (in the notation of the lecture 13) and performs alternating minimisation steps on W and Z many times. See also ex13 in the course, last task about implementing ALS.

2

Ok, thank you!! And at each iteration of ALS, we are agree that the cost is O(KND)? And at each iteration of SGD, the cost is O(K)?

Wouldn't be the cost of SGD be O(D) ?

My notes for the following costs:

GD = ND

SGD = D

Least squares = N D^2 D^3

GMM = D^2 * K

KNN = NDK

ALS = NDK

1

I am also confused about this question. Since the cost of SGD is O(D) per iteration, i.e., dependent on the D. Why (c) is correct?

1

SGD for least squares is linear in D

But this question ask SGD for matrix factorisation, in this case we derive a gradient with n and d fixed.

The only iteration is on features K so a SGD step for matrix factorisation is 0(K)

see this :

4

Add comment