Using the Hessian to prove that the loss function is convex

Hi, I have a question regarding the use of the Hessian (H) to prove that the loss function for logistic regression is convex.

At the end of the second lecture of week 5, we derive the Hessian to use it in the Newton method. It is also mentioned that, since the diagonal entries of S are non-negative, H is non-negative definite (which I assume is the same as positive semi-definite).

Why do the values of S matter here? Is it because they are the eigenvalues of H, so that H has non-negative eigenvalues? (If so, why are they the eigenvalues of H?)

Best regards and thank you in advance
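For reference, here is a minimal NumPy sketch of the gradient, the Hessian H = X^T S X and one Newton step. It assumes the standard unregularised logistic-regression negative log-likelihood with labels y in {0, 1}, so that S = diag(mu_i (1 - mu_i)) with mu_i = sigmoid(x_i^T w). The function names are illustrative and not taken from the lecture.

```python
import numpy as np


def sigmoid(z):
    """Logistic function 1 / (1 + exp(-z))."""
    return 1.0 / (1.0 + np.exp(-z))


def nll(w, X, y):
    """Negative log-likelihood sum_i [log(1 + exp(z_i)) - y_i z_i] with z = X w."""
    z = X @ w
    return np.sum(np.logaddexp(0.0, z) - y * z)  # logaddexp avoids overflow


def gradient_and_hessian(w, X, y):
    """Gradient X^T (mu - y) and Hessian X^T S X of the NLL above."""
    mu = sigmoid(X @ w)                 # predicted probabilities, shape (n,)
    g = X.T @ (mu - y)                  # gradient, shape (d,)
    S = np.diag(mu * (1.0 - mu))        # S = diag(mu_i (1 - mu_i)), shape (n, n)
    H = X.T @ S @ X                     # Hessian, shape (d, d)
    return g, H


def newton_step(w, X, y):
    """One Newton update w - H^{-1} g (solve the system instead of inverting H)."""
    g, H = gradient_and_hessian(w, X, y)
    return w - np.linalg.solve(H, g)
```

Calling `newton_step` repeatedly from w = 0 on non-separable data typically converges in a handful of iterations. On linearly separable data the maximum-likelihood weights diverge, so a regulariser or a line search would be needed.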

I think the following classical result from calculus is what you are looking for: "A twice differentiable function of several variables is convex on a convex set if and only if its Hessian matrix of second partial derivatives is positive semidefinite on the interior of the convex set."
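To connect this result to numbers, the sketch below approximates the Hessian by central finite differences and checks whether its smallest eigenvalue is non-negative at a given point. This is only a sanity check of the second-order condition at the points tested, not a proof of convexity; the helper names, the step size `eps` and the tolerance `tol` are assumptions.

```python
import numpy as np


def numerical_hessian(f, w, eps=1e-4):
    """Central finite-difference approximation of the Hessian of f at w."""
    d = w.size
    E = np.eye(d) * eps                  # E[i] is a step of size eps along axis i
    H = np.zeros((d, d))
    for i in range(d):
        for j in range(d):
            H[i, j] = (f(w + E[i] + E[j]) - f(w + E[i] - E[j])
                       - f(w - E[i] + E[j]) + f(w - E[i] - E[j])) / (4 * eps**2)
    return H


def looks_psd(H, tol=1e-6):
    """True if the symmetric part of H has smallest eigenvalue >= -tol."""
    H = 0.5 * (H + H.T)                  # remove tiny asymmetry from rounding
    return np.linalg.eigvalsh(H).min() >= -tol
```

For example, the logistic loss passes at every sampled point, while f(w) = w_0^2 - w_1^2 fails at the origin because its Hessian diag(2, -2) has a negative eigenvalue.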

Thank you for your answer; I understand that part.

More specifically, my question is: how can you see from the expression for H (H = X^T S X, shown in the image below) that H is positive semi-definite?

Thank you in advance,

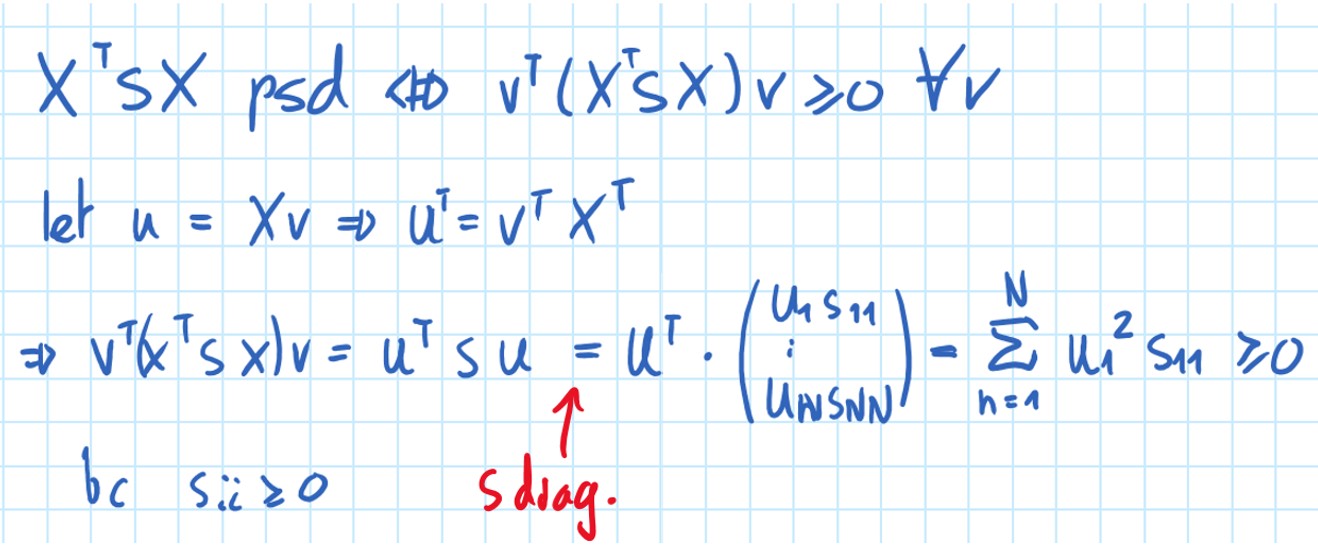

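This is because for any X, X^T X is positive semidefinite: for any vector v, v^T X^T X v = (Xv)^T (Xv) = ||Xv||^2 ≥ 0. Since S is a diagonal matrix with non-negative entries s_i, the same argument gives v^T X^T S X v = (Xv)^T S (Xv) = Σ_i s_i (Xv)_i^2 ≥ 0, so X^T S X is also PSD.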
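The argument can be checked numerically. The sketch below builds H = X^T S X for logistic regression, assuming the standard form S = diag(mu_i (1 - mu_i)) with mu_i = sigmoid(x_i^T w), and compares v^T H v with the sum Σ_i s_i (Xv)_i^2 for random v. The helper names are hypothetical. Note also that S is n×n while H is d×d, so the diagonal entries of S are generally not the eigenvalues of H; what matters is that each s_i ≥ 0 makes every term of that sum non-negative.

```python
import numpy as np


def logistic_hessian(X, w):
    """Return H = X^T S X and the diagonal s of S = diag(mu_i (1 - mu_i))."""
    mu = 1.0 / (1.0 + np.exp(-(X @ w)))  # mu_i = sigmoid(x_i^T w)
    s = mu * (1.0 - mu)                  # each s_i lies in [0, 1/4]
    H = X.T @ (s[:, None] * X)           # same as X.T @ np.diag(s) @ X
    return H, s


def quadratic_form_two_ways(X, s, v):
    """Compute v^T X^T S X v directly and as sum_i s_i (X v)_i^2."""
    H = X.T @ (s[:, None] * X)
    direct = v @ H @ v
    u = X @ v                            # u = X v
    as_sum = np.sum(s * u**2)            # each term: s_i >= 0 times a square
    return direct, as_sum
```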

Clearer answer:


Thank you so much, it makes sense now!
