Hello, in lecture 4 when explaining the upper bound on the true error, I don't understand the role that the number of models 'K' plays. Why is it included in the expression and why exactly does the number of models we test affect this bound?

One more thing I didn't quite understand was in the bias variance decomposition, why did we introduce the S'_train term, was this just to obtain the bias and variance components or is there some more fundamental reason that I am missing?

Hello! I'd like to add a question to this question.

In particular, in the last passages reported from the lecture notes 4b, I didn't really understand what are the reasons behind the passage (c), from the second-last to the last row.

Thanks in advance for your answers!

Disclaimer: I'm a student that just re-read week 4 classes, so take what I say with a grain of salt :D.

I don't understand the role that the number of models 'K' plays. Why is it included in the expression and why exactly does the number of models we test affect this bound?

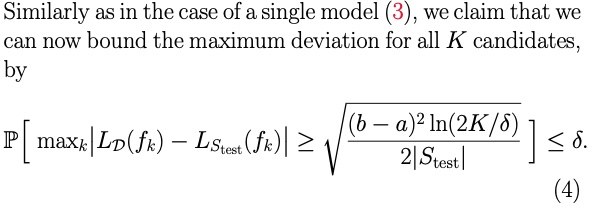

It is explained a bit later in the pdf, although it's a bit cryptic at first:

"For a general K, if we check the deviations for K models and ask for the probability that for at least one such model, we get a deviation of at least ε then by the union bound this probability is at most K times as large as in the case where we are only concerned with a single instance. I.e., the upper bound becomes \(2Ke^{−2|S_{test}|ε^2/(b−a)^2}\)

In formula (4), if you are "expanding" that max_k for each model, you would have something that looks like this:

(Important to note: I'm using the formulation that you see in lemma 0.1 with the ε and the upper bound as an exponential)

You can split those unions and summing the probabilities, and that quantity will be bounded by

\(k \cdot 2Ke^{−2|S_{test}|ε^2/(b−a)^2}\)

Hopefully, that makes sense to you...

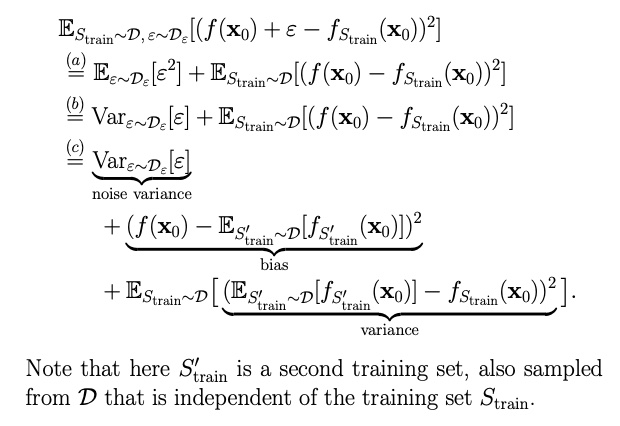

why did we introduce the S'_train term, was this just to obtain the bias and variance components or is there some more fundamental reason that I am missing?

I understood it this way: to know the variance of your model, you need to test it with two separate datasets, and see whether you have a big difference between the two.

But indeed, maybe it's just a math artifact to make the decomposition happen...

I didn't really understand what are the reasons behind the passage (c), from the second-last to the last row.

The trick they used is to add and subtract the \(E_{S_{train}}\) INSIDE the square in (b). From there, you expand the square (you need to group the terms two by two, the minus \(E_{S_{train}}\) goes on the left, and the plus \(E_{S_{train}}\) on the right), drop the middle term, and you obtain (c).

Question regarding generalization error

Hello, in lecture 4 when explaining the upper bound on the true error, I don't understand the role that the number of models 'K' plays. Why is it included in the expression and why exactly does the number of models we test affect this bound?

One more thing I didn't quite understand was in the bias variance decomposition, why did we introduce the S'_train term, was this just to obtain the bias and variance components or is there some more fundamental reason that I am missing?

Thanks in advance for your time!

3

Hello! I'd like to add a question to this question.

In particular, in the last passages reported from the lecture notes 4b, I didn't really understand what are the reasons behind the passage (c), from the second-last to the last row.

Thanks in advance for your answers!

1

Hey there,

Disclaimer: I'm a student that just re-read week 4 classes, so take what I say with a grain of salt :D.

It is explained a bit later in the pdf, although it's a bit cryptic at first:

"For a general K, if we check the deviations for K models and ask for the probability that for at least one such model, we get a deviation of at least ε then by the union bound this probability is at most K times as large as in the case where we are only concerned with a single instance. I.e., the upper bound becomes \(2Ke^{−2|S_{test}|ε^2/(b−a)^2}\)

In formula (4), if you are "expanding" that max_k for each model, you would have something that looks like this:

\(p[L_D(f_1)\gtε \cup L_D(f_1)\gtε \cup ... \cup L_D(f_k)\gtε] < ...\)

(Important to note: I'm using the formulation that you see in lemma 0.1 with the ε and the upper bound as an exponential)

You can split those unions and summing the probabilities, and that quantity will be bounded by

\(k \cdot 2Ke^{−2|S_{test}|ε^2/(b−a)^2}\)

Hopefully, that makes sense to you...

I understood it this way: to know the variance of your model, you need to test it with two separate datasets, and see whether you have a big difference between the two.

But indeed, maybe it's just a math artifact to make the decomposition happen...

The trick they used is to add and subtract the \(E_{S_{train}}\) INSIDE the square in (b). From there, you expand the square (you need to group the terms two by two, the minus \(E_{S_{train}}\) goes on the left, and the plus \(E_{S_{train}}\) on the right), drop the middle term, and you obtain (c).

2

Add comment