After checking the solution of Lab07 and the lecture slide, I still feel puzzled about Coordinate Descent (Ascent) for SVM, i.e., question 2 for the code. I am wondering what the update formulas are for \alpha and w, because I didn't find them on the slide. Specifically, why \alpha is updated like: alpha[n] = min(max(old_alpha_n + lambda_ * g / (x_n.T.dot(x_n)), 0.0), 1.0) and w is updated like: w += 1.0 / lambda_ * (alpha[n] - old_alpha_n) * y_n * x_n

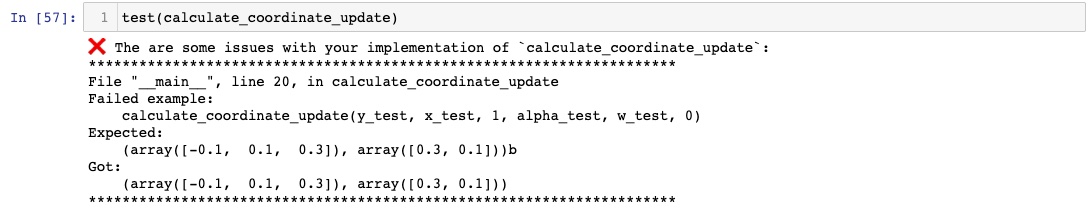

Besides, what does the b stands for in the test case?

I remembered struggling on this part as well. I took time to cleanly differentiate \(f(\alpha +\gamma e_n) \) wrt \(\gamma \) and finally found something that passes the test. I hope it is completely correct though. I think the correction just pushed the optimization at maximum making it look unclear (like that the fact that they calculate g in 2 times to avoid doing unnecessary computations before branching. By the way, I just realized that this if statement only makes sense in their version also for optimization since the result would be correct without it).

The update of alpha is straightforward then, you update the nth value with clamping constraint [0,1] such as described in the equation (3).

For w, it is the optimized way of updating the weights. You could also do it the old way with w = 1/lambda_ * X.T @ np.diag(y) @ alpha . The idea here is that you take only the difference in the update of alpha[n] (the only thing that has changed in the previous computations actually) to add it to the weights. It corresponds to the same result but avoids doing useless matrix multiplications.

Lab07 Coordinate Descent (Ascent) for SVM

Hi,

After checking the solution of Lab07 and the lecture slide, I still feel puzzled about Coordinate Descent (Ascent) for SVM, i.e., question 2 for the code. I am wondering what the update formulas are for \alpha and w, because I didn't find them on the slide. Specifically, why \alpha is updated like:

alpha[n] = min(max(old_alpha_n + lambda_ * g / (x_n.T.dot(x_n)), 0.0), 1.0)and w is updated like:w += 1.0 / lambda_ * (alpha[n] - old_alpha_n) * y_n * x_nBesides, what does the b stands for in the test case?

Thanks in advance!

I remembered struggling on this part as well. I took time to cleanly differentiate \(f(\alpha +\gamma e_n) \) wrt \(\gamma \) and finally found something that passes the test. I hope it is completely correct though. I think the correction just pushed the optimization at maximum making it look unclear (like that the fact that they calculate g in 2 times to avoid doing unnecessary computations before branching. By the way, I just realized that this if statement only makes sense in their version also for optimization since the result would be correct without it).

The update of alpha is straightforward then, you update the nth value with clamping constraint [0,1] such as described in the equation (3).

For w, it is the optimized way of updating the weights. You could also do it the old way with

w = 1/lambda_ * X.T @ np.diag(y) @ alpha. The idea here is that you take only the difference in the update of alpha[n] (the only thing that has changed in the previous computations actually) to add it to the weights. It corresponds to the same result but avoids doing useless matrix multiplications.1

Add comment