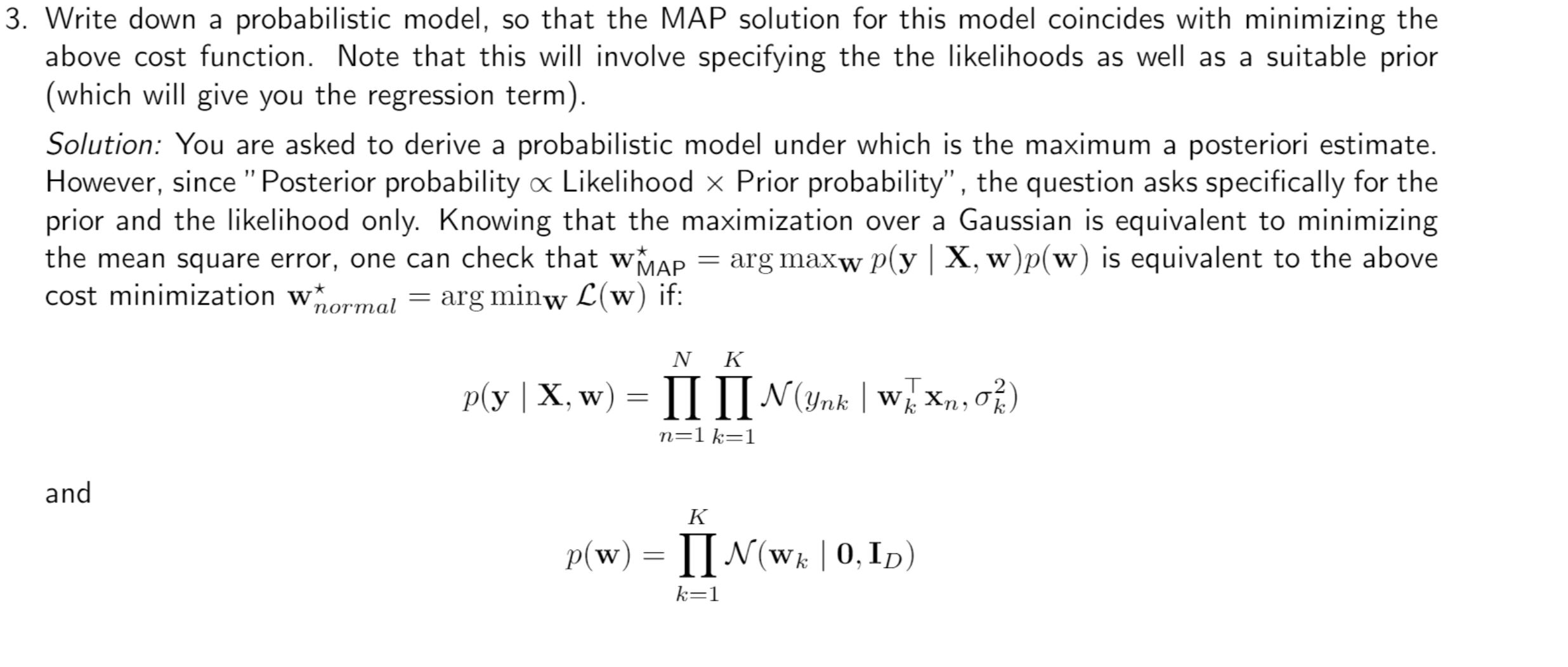

I'm pretty sure we did not cover this in the lectures this year but at the end of the Ridge Lecture there's a section (which as far as I remember was not covered in the lecture) where ridge regression is expressed as a MAP estimator

The trick is to recognize that the normal equations of that problem are very similar to the normal equations of ridge except that in ridge the identity is multiplied by λ. In the Ridge derivation, we assumed a normal prior, with 0 mean and λII (identity) variance. Since there's no lambda in the normal equations, we can assume a normal prior with 0 mean and II (identity) variance.

mock2018

Hi,

I am not sure about the reasoning behind how we reach this answer in question 2 of mock 2018. Which lecture notes would help in this scenario?

How is the probability p(w) obtained for example, why do we know it behaves as a normal with 0 mean and unit variance?

Thanks for the help

1

I believe maximum likehood lecture 3.

I'm pretty sure we did not cover this in the lectures this year but at the end of the Ridge Lecture there's a section (which as far as I remember was not covered in the lecture) where ridge regression is expressed as a MAP estimator

The trick is to recognize that the normal equations of that problem are very similar to the normal equations of ridge except that in ridge the identity is multiplied by λ. In the Ridge derivation, we assumed a normal prior, with 0 mean and λII (identity) variance. Since there's no lambda in the normal equations, we can assume a normal prior with 0 mean and II (identity) variance.

2

Add comment