I have a question regarding problem 13 in the 2018 final exam (image below).

If I understood correctly, the points that lie exactly on the margin have a loss value of 0, since \(y_{n} x_{n}^{\top} w = 1\). So they don't affect the value of the loss function, and therefore not the weight update either. Then why does the margin increase when we remove these points?

I’m not a TA, but the way I understood it is that the solution is usually very sparse (in other words, there are usually only a few support vectors compared to the amount of data). So if you remove a support vector, the margin will probably increase, because the next-closest point is further away and allows a wider margin. If that makes sense?

Edit: I think the key here is that lambda is very large, which restricts the complexity of the model.

If we use the dual formulation, then the points exactly on the margin have an alpha between 0 and 1. With alpha greater than 0, these points are taken into account when computing the loss function or the hyperplane. That is why the margin will change. Since the point was on the margin and we want the hyperplane with the maximal margin, removing that point allows the margin to increase.

Having said that, I am still not quite sure, because you are right that the points that lie exactly on the margin have a loss value of 0, and even if alpha is 0.9 we are multiplying 0.9 by 0.

@anonymous, could you explain why the key is the size of lambda? How would the results change if lambda were smaller?

I think the point is that you can now reduce the full loss function, including the regularization term, by making the norm of the weights smaller without incurring a higher classification error. That is, you can increase the margin without making any wrong predictions (since we have just removed the support vectors) while still reducing the regularization term. Note that the function being optimized above includes the L2 term.

"@anonymous why does removing a point on the margin imply that w gets smaller?"

We remove a point on the margin.

We can therefore make the margin bigger.

The width of the margin is equal to 2/||w||, so increasing the margin means making the norm of the weights, ||w||, smaller.

Notice that this also decreases the loss function, especially because the very large value of lambda gives the regularization term more weight than the wrong-predictions term.
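To check the steps above on something concrete, here is a toy 1D sketch (not from the exam). It assumes the objective is 0.5 * lambda * w^2 plus the sum of hinge losses, with no bias term, and it uses a small lambda = 0.1 picked only for illustration, so it does not reproduce the very-large-lambda setting mentioned in the thread. The data, the helper names `objective` and `fit_by_grid`, and the grid search are all made up for this example.

```python
def objective(w, xs, ys, lam):
    """L2-regularized hinge loss for a 1D linear classifier without bias."""
    hinge = sum(max(0.0, 1.0 - y * x * w) for x, y in zip(xs, ys))
    return 0.5 * lam * w * w + hinge


def fit_by_grid(xs, ys, lam, w_max=2.0, steps=2000):
    """Brute-force minimizer over an evenly spaced grid of w in [0, w_max]."""
    grid = [w_max * i / steps for i in range(steps + 1)]
    return min(grid, key=lambda w: objective(w, xs, ys, lam))


lam = 0.1  # assumed value, chosen only for illustration
xs, ys = [-2.0, 1.0, 3.0], [-1, 1, 1]

w_full = fit_by_grid(xs, ys, lam)
# The point x = 1 satisfies y * x * w_full = 1: it lies exactly on the margin
# and contributes zero hinge loss, yet it is what stops w from shrinking.
loss_of_margin_point = max(0.0, 1.0 - 1 * 1.0 * w_full)

# Drop that point and refit.
w_reduced = fit_by_grid([-2.0, 3.0], [-1, 1], lam)

print("all points:        w =", w_full, " margin width =", 2 / abs(w_full))
print("margin point gone: w =", w_reduced, " margin width =", 2 / abs(w_reduced))
```

The point on the margin has zero loss at the optimum, but if w shrank any further that point would start paying hinge loss. Once it is removed, nothing blocks the regularizer from shrinking w until the next-closest point ends up on the margin.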

I'm guessing that the hyperplane could rotate and the margin could still increase even if there are other points on the margin. However, you are completely right that there might be cases in which the margin does not increase, for example if there is another point right next to the removed point on the margin.

Nevertheless, the question asks for the most likely scenario, and I guess we should assume that in the most likely scenario there are no duplicate points and the margin is not completely covered with points.
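The caveat above can also be seen on a toy case. This is only a sketch under assumptions: 1D data, no bias term, objective 0.5 * lambda * w^2 plus hinge losses, and lambda = 0.1 picked for illustration. The helper `fit_1d` and the data are hypothetical. Here two points sit on the margin, one per class, and removing one of them leaves the margin where it was.

```python
def fit_1d(xs, ys, lam=0.1, w_max=2.0, steps=2000):
    """Grid-search the minimizer of 0.5*lam*w^2 + sum of hinge losses (1D, no bias)."""
    def obj(w):
        hinge = sum(max(0.0, 1.0 - y * x * w) for x, y in zip(xs, ys))
        return 0.5 * lam * w * w + hinge
    return min((w_max * i / steps for i in range(steps + 1)), key=obj)


# Two points on the margin: x = -1 (class -1) and x = 1 (class +1).
w_before = fit_1d([-1.0, 1.0, 3.0], [-1, 1, 1])
# Remove x = 1; the point x = -1 is just as close and still pins the margin.
w_after = fit_1d([-1.0, 3.0], [-1, 1])

print("margin width before:", 2 / w_before, "after:", 2 / w_after)
```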

Hello, I have a question about this task.

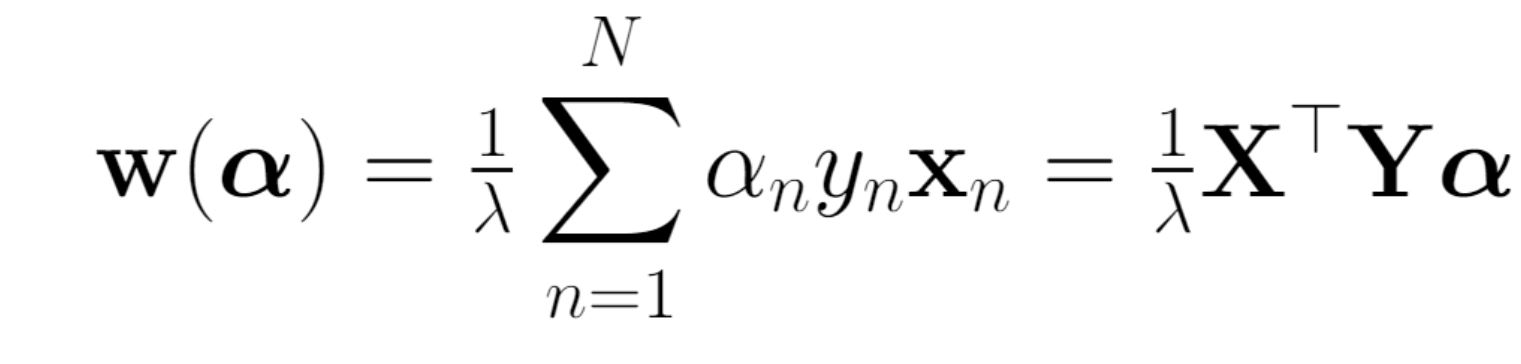

We have this formula for w.

So w depends on the values of alpha. But if a point lies exactly on the boundary of the margin, then its alpha can be anywhere from 0 to 1 inclusive. If alpha is set to 0, this point doesn't matter for w, and nothing changes if it is removed. But if alpha is non-zero, then w changes after removing this point. So the answer seems ambiguous.
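One way to look at this: for a point on the margin, alpha is not something we get to pick freely. Assuming the formula is the usual w = (1/lambda) * sum_n alpha_n y_n x_n (an assumption on my side, since the formula itself is not reproduced in the text), the optimality condition fixes the value of alpha. The sketch below works this out on made-up 1D data with no bias term and an illustrative lambda = 0.1; for this data the minimizer of 0.5 * lambda * w^2 plus the hinge losses is w = 1.

```python
lam = 0.1                               # assumed value, illustration only
xs, ys = [-2.0, 1.0, 3.0], [-1, 1, 1]   # made-up 1D data, no bias term
w = 1.0                                 # minimizer of the toy objective for this data

alphas = []
margin_index = None
for i, (x, y) in enumerate(zip(xs, ys)):
    m = y * x * w                       # functional margin of point i
    if m > 1.0:
        alphas.append(0.0)              # strictly outside the margin
    elif m < 1.0:
        alphas.append(1.0)              # inside the margin or misclassified
    else:
        alphas.append(None)             # exactly on the margin: determined below
        margin_index = i

# Stationarity: lam * w = sum_n alpha_n * y_n * x_n.
# With a single margin point, this is one equation in one unknown alpha.
known = sum(a * y * x for a, x, y in zip(alphas, xs, ys) if a is not None)
x_m, y_m = xs[margin_index], ys[margin_index]
alphas[margin_index] = (lam * w - known) / (y_m * x_m)

print("alphas:", alphas)
```

In this toy case the margin point gets a strictly positive alpha, because it is the only point that can make up w. Setting its alpha to 0 would give w = 0, which is not the optimum. So here the point does matter, even though its loss is 0.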

Exam - SVM

I agree with you and would love to get more explanation about your thoughts @anonymous1

Adding to the last comment, the total width of the margin is equal to 2/||w||. Thus, if ||w|| is smaller, the total width of the margin will increase.

@anonymous why does removing a point on the margin imply that w gets smaller?

OK thanks for the clear explanation. Is it still true if we have more than one point in the margin?

Good point!!

Alright ! Thanks :)
